My responsibilities revolve around providing the business with the data they need to make informed business decisions. One of those processes requires us to shift data from a Snowflake data warehouse housed in AWS to an Azure Synapse Analytics data warehouse.

We use Azure Data Factory as our ETL system, which has native drivers for Snowflake, but they only work under very limited situations, none of which suited this specific workflow. This meant we needed to use their odbc driver and to do that, we had to create a managed VM to contain the drivers and an Integration Runtime. This enables us to connect Snowflake directly to our Synapse system. Viola!

This setup, however, is not without its own quirks. You see we also use Azure Analysis Services, which requires ODBC, and some of its data sets also reside on Snowflake. This starts to put a significant amount of pressure on the VMs that act as a gateway, so we began looking for alternatives to moving data, to free up the VMs for cube builds only.

Snowflake allows you to export any table as a series of .gzipped files with the copy into command. You either copy into Snowflake or copy into a set of files. To do this required a script that would normally be run on a machine with the ability to connect to Snowflake. There was no easy way to coordinate this. Originally, we decided to have the job run as the end of a nightly process on Snowflake. We extended the job to push the table to a set of files in an Azure connected storage account and then call our event hub to signal the job is complete. That event would trigger a logic app, which would then call a Data Factory Pipeline that would ingest those files using a feature of Synapse called Polybase.

Polybase is the feature that Synapse uses to push/pull files (like the copy into command). Woot! We have bypassed the VM, but all was not good. Polybase gives incredible performance gains but is very finicky and expects the file to be formatted in some special way before it can ingest it. Even a simple '|' delimited file was copied to a set of staging files and then ingested. This was a dead-end, that is until the new Script Object became available in the Azure Data Factory.

Now we could run that exact command in our pipeline, which gave us more fine-grained control. Part 1. Part 2 was sneaky.

I wanted to see just what Polybase was doing with these files and it turns out to be something incredibly simple.

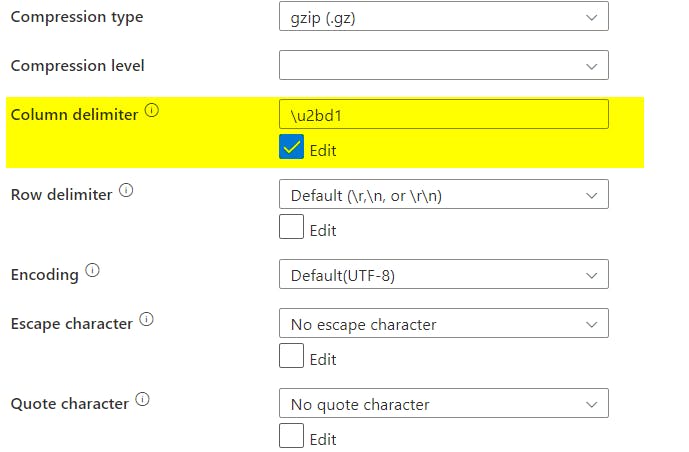

Polybase is simply replacing whatever delimiter you use in the source file with: \u2bd1

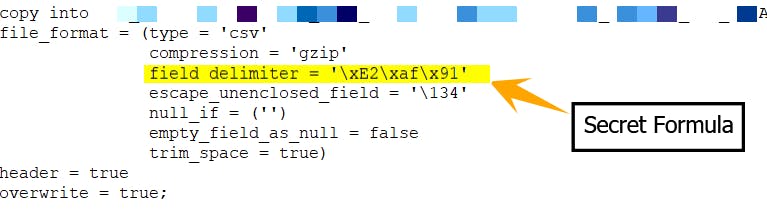

That seemed simple enough, but it turns out for Snowflake you need to tell it to use: \xE2\xaf\x91 as the delimiter.

This looks script object looks like this:

The source of the copy object looks like this:

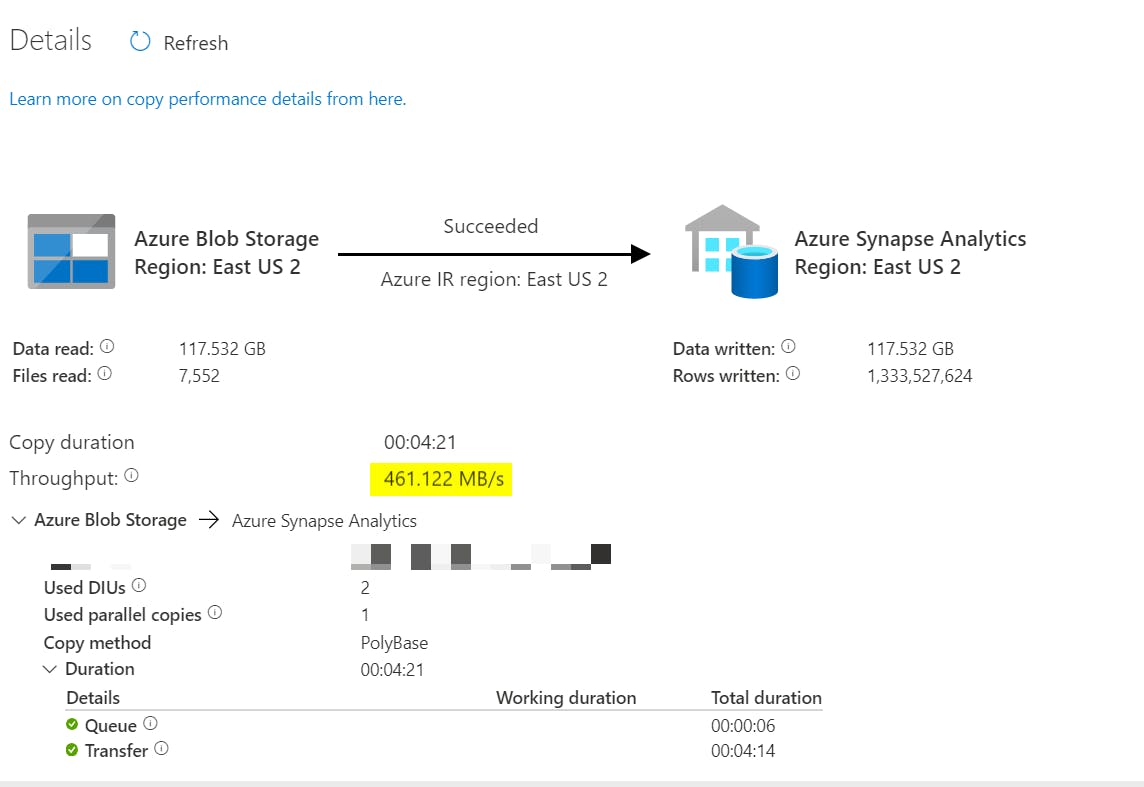

What type of performance do you get?

What type of performance do you get?

461MB/s that is 100 times faster than we were getting with an odbc connection through a managed VM. Done!

You can use this trick to improve the ingest speed of any csv file.spee